Update on Root Cause and Prevention of Similar Incidents

Last updated: 15:05 CET 12.08.2025

Statement from Egil Anonsen, CPTO at Nofence

Hello, my name is Egil Anonsen, CPTO at Nofence.

I wanted to give you more information about the recent incident that took place last week. I’ll do that by focusing on three main areas:

- First, I’ll go through the timeline and sequence of events.

- Secondly, I’ll give some information about our current understanding of the problem and our hypothesis of the root cause behind this incident.

- And finally, I’ll look forward a bit and give some insights into what we can do to prevent this from happening in the future.

Timeline and sequence of events

So let me start off with the timeline and what actually took place.

Last weekend our internal monitoring systems showed a slight increase in pulses on selected collars, namely version 2.2 and older, which we typically call Legacy devices, and we also saw an increase in support tickets. We do have comprehensive monitoring and automated alerting for our fleet, with on-call engineers who are also notified outside normal office hours and during the weekend.

By Monday morning August 4th, it became evident that this was more than just a few isolated incidents, and that it was affecting a larger part of our fleet. As soon as we understood this was a major incident, we followed our internal escalation process, and gathered all available resources into a task force. The task force has since worked day and night trying to understand the issue, with the top priority to mitigate the excess pulses as soon as possible.

As part of the escalation process, we informed customers later the same day that, for affected collars, they should consider expanding the pasture, avoiding pastures close to buildings, or removing the pasture altogether if possible.

It quickly became clear that it was affecting only version 2.2 collars and earlier, which were the collars sold up until the start of 2023. It was also clear that both Cattle and Sheep-Goat collars were affected in a similar way, and that collars in all geographical markets were affected. However, the vast majority were in Norway, simply because that’s where we sold most of our collars up until 2023.

We estimate that approximately 5% of our fleet of collars were affected by this incident.

I would also like to mention that the vast majority of our fleet is made up of version 2.5 which started shipping in 2023, and these collars were unaffected by this incident.

All our collars are remotely upgradable, both the 2.2 collars and earlier, and of course the most recent 2.5 version. On Tuesday August 5th, after further analysis and testing, we rolled out two new configurations to the fleet, including disabling power saving mode. However, neither of them had the effect we wanted.

Then finally on Wednesday August 6th we rolled out another configuration around 14:00 in the afternoon to 20% of the affected fleet. We quickly saw a significant improvement, and then decided to roll this configuration out to the entire fleet. Soon after this we saw that all collars started to recover, and the number of pulses returned to pre-incident levels.
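To illustrate the staged rollout described above, here is a minimal sketch of one common way to select a stable percentage of a fleet. It is not our actual rollout tooling: the function name `in_rollout` and the collar ID format are hypothetical, and hashing the ID is simply an assumed selection method.

```python
import hashlib


def in_rollout(collar_id: str, percent: int) -> bool:
    """Return True if this collar falls inside the first `percent` of the fleet.

    Hypothetical helper: the collar ID is hashed into a stable bucket 0..99,
    so a collar selected at 20% stays selected when the rollout widens to 100%.
    """
    digest = hashlib.sha256(collar_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


# Example: start with 20% of the affected collars, then widen to everyone.
affected = [f"collar-{n}" for n in range(1000)]  # made-up IDs
first_wave = [c for c in affected if in_rollout(c, 20)]
full_wave = [c for c in affected if in_rollout(c, 100)]
```

Because the bucket depends only on the collar ID, every collar in the first wave is also in the full rollout, so the early results carry over when the configuration is extended to the whole fleet.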

To err on the side of caution, we wanted to see 24 hours of stable operation before informing customers that it was now safe to use the collars again. As a result, we communicated this later on Thursday, after 24 hours of normal collar activity.

Over the last few days we have also re-enabled power saving mode on all collars, so the collars should now behave as well as they did before the incident.

Hypothesis on root cause

I will now discuss our current understanding of the root cause, although it’s important to say that we still don’t understand it fully and are still working hard to get to the bottom of this.

The 2.2 version of the collar is based on a design from several years ago, and as a result uses technology and electronics that have since been improved. The 2.2 version uses a Global Navigation Satellite System (GNSS) receiver from uBlox, one of the largest GNSS manufacturers in the world. But this GNSS receiver, called the ZOE-M8, has some weaknesses and limitations. We have since launched the new version 2.5 of our collar, which uses the new and improved GNSS receiver called MIA M10.

There are four major GNSS constellations in use today: GPS, Galileo, Glonass and Beidou. The ZOE-M8 has some limitations, which means that it is only capable of tracking two of these constellations simultaneously while still operating at low power. Developing a product like a virtual fencing collar is a continuous exercise in balancing different parameters to make the best overall product. Therefore the 2.2 collars use the GPS and Glonass constellations as a tradeoff between location accuracy and power consumption.

Normally, these collars see around 16 satellites, which in most cases gives a very reliable position. Yet, during the incident, we saw that this would drop to around 11-12 satellites. When the collar sees fewer satellites, its position will typically be less reliable. This can cause the GNSS position to drift, misleading the collar into thinking the animal is outside the pasture when it really is not, and then incorrectly starting the correction sequence with audio and pulse.

The 2.2 collars also use a feature called AssistNow Offline (also known as ANO). It is useful after the collar has been in power saving mode, which takes effect when the animal is not moving much, in order to extend the battery life of the collar. When the animal then wakes up and starts moving again, the GNSS receiver is turned back on, and ANO helps predict where the GNSS satellites will be. As a result, the collar should obtain a reliable position faster when coming out of power saving mode than if it has to start from scratch. This duration is also known as time-to-first-fix, or TTFF, and is a parameter we want to keep as short as possible.

As mentioned earlier, Glonass is one of the constellations used by the legacy collars. The Glonass constellation put one satellite under maintenance at a time that correlates well with when the incident took place. We currently believe the ZOE-M8 didn’t handle this event properly and lost its ability to track all available satellites, which reduced its ability to give an accurate location.

In comparison, the version 2.5 collar has a state-of-the-art receiver, uses both GPS and Galileo simultaneously, and typically sees around 25 satellites. This means it’s much more resilient against GNSS disturbances such as the one we just experienced.

The fix rolled out on Wednesday last week consisted of disabling this ANO feature for our collars. This means that the collars will not download a file with predictions of where satellites will be, but will instead get this information from the satellites during normal operation. We’ve seen from early analysis of the fleet over the weekend that this change may actually have lowered the TTFF in most scenarios, which means that the collars should currently behave just as well as they did before the incident.

How to prevent this from happening in the future

Finally, now that the incident has been mitigated, focus shifts to properly understanding the root cause and implementing mitigating actions to reduce the likelihood of a similar type of incident happening again. We are also working closely with our GNSS vendor uBlox to root cause this issue and implement the most effective corrective actions. This is the most important track: in order to find the most reliable and effective improvements to implement in the future, we need to understand fully what went wrong.

It’s important to say that we do already have algorithms running on the collar to handle GNSS disturbances and thus avoid giving the animal unintended pulses. We should not simply accept that fewer satellites will result in more unintended pulses, and this incident has made it clear that these algorithms need to be further improved.

In addition to reporting the position every 15 minutes, the collars also report a lot of metadata that we use to train and improve our algorithms further. We are now looking into which of these parameters and algorithms we can use to detect such GNSS disturbances and handle them far more gracefully to avoid these unintended pulses. One example could be to make more assumptions about animal behaviour and how animals move, and if the position is suddenly far outside the fence, to conclude that it is most likely a GNSS disturbance and thus filter that position away.
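As a rough illustration of that last idea, the sketch below rejects a new position if reaching it from the last accepted position would require the animal to move faster than a plausible top speed. This is not the algorithm running on the collars: the function names, the `(latitude, longitude)` tuple format and the 8 m/s speed limit are all assumptions chosen for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_plausible_fix(prev, new, elapsed_s: float, max_speed_mps: float = 8.0) -> bool:
    """Return False when the jump from `prev` to `new` implies an implausible speed.

    `prev` and `new` are (latitude, longitude) tuples in degrees.
    `max_speed_mps` is an assumed upper bound on how fast the animal can move.
    """
    if elapsed_s <= 0:
        return False  # cannot judge a fix without a positive time difference
    implied_speed = haversine_m(*prev, *new) / elapsed_s
    return implied_speed <= max_speed_mps


last_good = (60.0, 10.0)
small_step = (60.0001, 10.0)  # roughly 11 m north
big_jump = (60.005, 10.0)     # roughly 556 m north
```

With five seconds between fixes, `is_plausible_fix(last_good, small_step, 5)` accepts the position, while `is_plausible_fix(last_good, big_jump, 5)` rejects it as a likely GNSS disturbance. A real filter would need to weigh more signals than speed alone, for example the metadata the collars already report.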

We are also exploring the use of different GNSS constellations, even if some of these will consume significantly more power. These configurations can then quickly be deployed to our fleet as a temporary mitigating solution if a similar incident were to occur in the future.

We are also considering exposing more configuration options to the end user if a similar incident were to happen again, such as potentially disabling the pulse while keeping the audio warning on a collar-by-collar basis, but the details around this are still being worked through.

We will of course also revisit our internal metrics and alert systems to ensure that a similar incident is caught as early as possible, so that information can be given as quickly as possible and we can perform mitigating actions on the fleet to minimise the overall impact.
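One kind of metric such a review could consider is the number of satellites the collars see, since that dropped from around 16 to around 11-12 during the incident. The sketch below is only an assumed example of such a check, not an existing alert: the function name `fleet_degraded` and the 80% threshold are hypothetical.

```python
from statistics import median


def fleet_degraded(satellite_counts, baseline: float = 16, min_ratio: float = 0.8) -> bool:
    """Return True when the fleet's median satellite count falls well below baseline.

    `satellite_counts` holds the latest count reported by each collar.
    `baseline` is the usual count (around 16 for the 2.2 collars) and
    `min_ratio` is an assumed tolerance before an alert is raised.
    """
    if not satellite_counts:
        return False  # no data, so nothing to alert on
    return median(satellite_counts) < baseline * min_ratio


normal_day = [16, 15, 17, 16]
incident_day = [11, 12, 11, 12, 12]
```

Here `fleet_degraded(normal_day)` is false, while `fleet_degraded(incident_day)` is true because a median of 12 is below the threshold of 12.8 satellites.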

Finally, I would again like to express our deepest apologies for this incident, and we will do what we can to make sure this will not happen again.

Thank you.

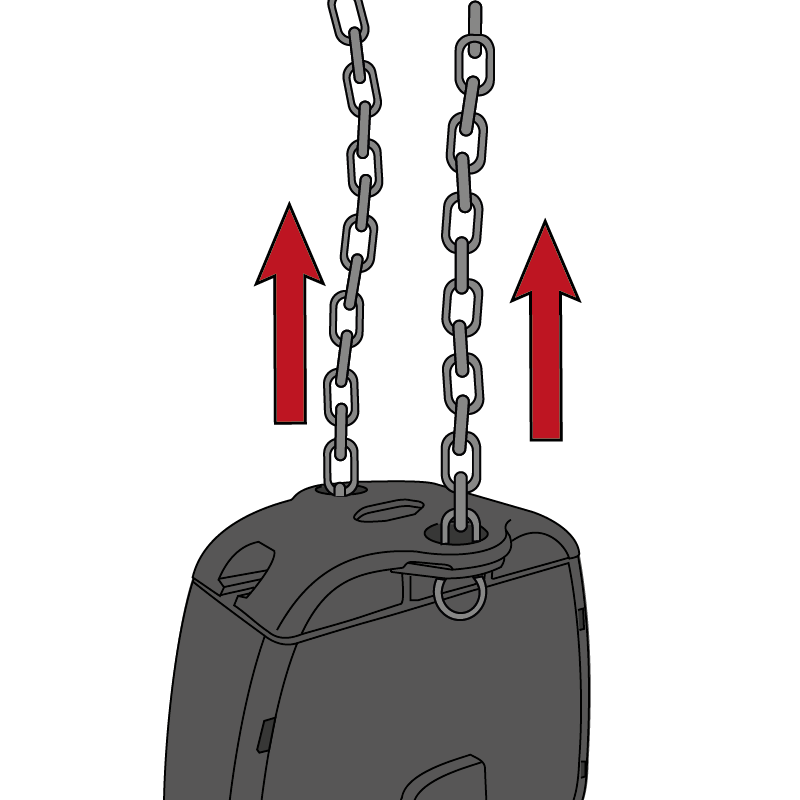

1 Remove the chains.

2 Loosen the screw and remove it.

3 Slide the old bracket off.

Note: Gloves may be needed if the bracket is hard to remove.

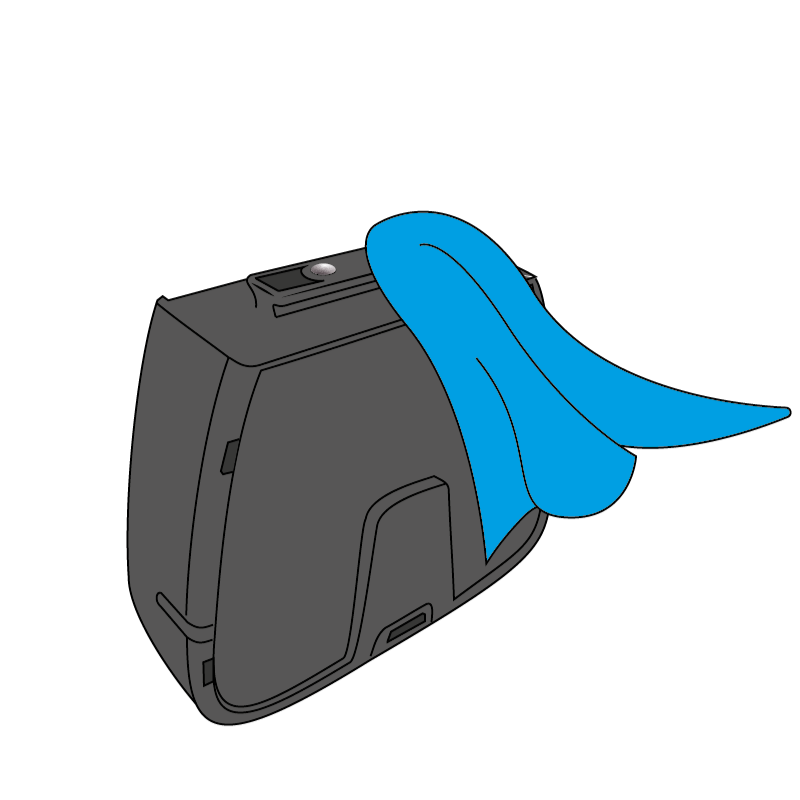

4 Clean collar with a moist cloth and allow to dry.

! Make sure that the area around the metal contact pins is clean.

5 Slide the new bracket into place.

6 Insert the screw back in.

! Avoid tightening too hard. This is important to avoid damage to the new bracket and collar.

7 Attach the chains to the new bracket.

Do you have a voucher?

If you have received a voucher, you can redeem it to order free replacement brackets from the internal store. You must log in to your account pages and be the primary account holder to order.

Note: The voucher only covers the number of brackets you have applied for.

Is the problem still not solved?

If the above steps did not resolve the problem with the collar, you can contact Nofence customer support by clicking the button below.

Contact us:

United Kingdom & Ireland

+44 (0)1952 924044

Opening hours

Mon-Fri

9:00 - 17:00